Do you need a Jenkins tutorial?

Here is a complete introduction to CI/CD.

Let’s get started!

What is Jenkins?

Jenkins is a Continuous Integration / Continuous Delivery (CI/CD) tool that automates many steps in the development and release of software. Continuous integration means that all the progress developers make during the day is automatically integrated into a finished, built product. The pipeline merges the code and builds one or more versions of the software. The process is so highly automated that, in theory, several releases per day are possible. In reality, not every release is published, but every intermediate state is executable (for testing).

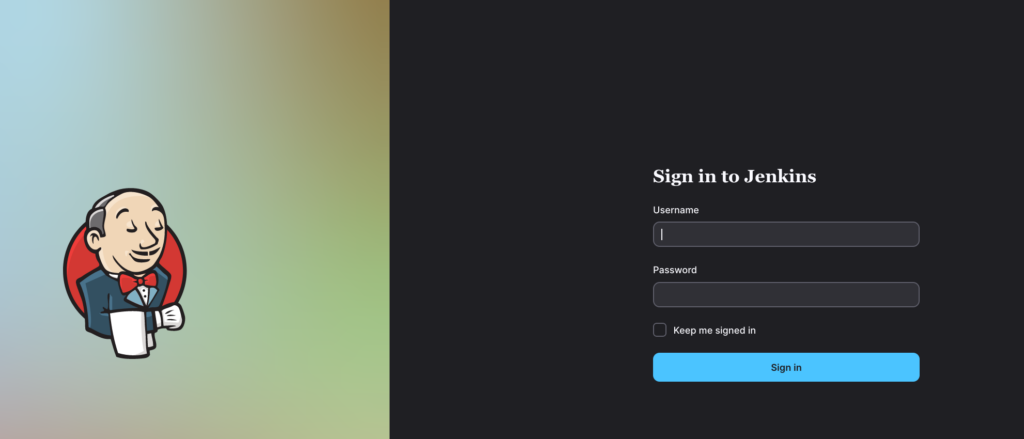

Pipelines work with super fresh code (bleeding edge), so a pipeline run can fail because of bugs, failing tests or other conditions, or pass all the way through to finished software (success).
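A declarative Jenkinsfile can react to both outcomes in a post section. The following is a minimal sketch, assuming the agent has Node.js installed and the project defines an npm test script that runs once and exits; the echo messages are placeholders for real notifications such as e-mail or chat messages.

```groovy
pipeline {
    agent any
    stages {
        stage('Test') {
            steps {
                // Any non-zero exit code here marks the run as failed
                sh 'npm ci'
                sh 'npm test'
            }
        }
    }
    post {
        // Runs only if every stage passed
        success {
            echo 'Pipeline passed: the build is ready.'
        }
        // Runs if any stage failed
        failure {
            echo 'Pipeline failed: check the console output.'
        }
    }
}
```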

Why should I use a pipeline?

Fast, faster, pipeline

A developer can now make a single change to a product and deliver finished software to the customer within minutes (in theory). The result is not a useless mess of code, but working software.

Better quality through automated testing

Unit, regression and integration tests are part of every good CI/CD project. They put the software through its paces and test its interaction with other software. The pipeline runs the tests for every release and checks whether they pass. As long as no complex new features have been added, the end customer can use the software directly.
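To make test results visible for every run, a test stage can hand its report to the junit step (JUnit plugin), which records the results in the build. A sketch of a single stage for the stages section, assuming the test runner is configured to write JUnit XML files into a reports/ folder (for Karma, for example, via a JUnit reporter plugin); the folder name is hypothetical.

```groovy
stage('Test') {
    steps {
        // Assumption: the test script runs once and writes JUnit XML into reports/
        sh 'npm test'
    }
    post {
        // Publish the results even if the tests failed
        always {
            junit 'reports/**/*.xml'
        }
    }
}
```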

The overview – bringing the individual parts together

Software projects can quickly become confusing. Imagine 1,000 developers working on one product. A pipeline merges their code into a single product, which gives everyone a better overview.

IT involves manual labour too – reducing manual tasks

IT not only automates the real world, it also automates itself. Pipelines execute other programmes, while pipelines themselves are a type of programme (a meta-programme) or script. If a lazy developer has to do the same task ten times a day, he or she will automate it.

Multicore – parallelisation of work

The world of end devices is so diverse that a pipeline is necessary. Code must be prepared (compiled) for different end devices, e.g. for different processor types. This work does not have to be done by a human; a pipeline can do it, and it can build several targets at the same time.
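In a declarative Jenkinsfile, a parallel block runs several stages at the same time. A minimal sketch, assuming a hypothetical build.sh script that takes the target architecture as its argument and a Jenkins agent labelled arm64 for the ARM build:

```groovy
stage('Build all targets') {
    // Both nested stages start at the same time
    parallel {
        stage('x86_64') {
            steps {
                // Hypothetical build script; runs on the pipeline's default agent
                sh './build.sh x86_64'
            }
        }
        stage('arm64') {
            // Assumption: an agent with the label arm64 exists
            agent { label 'arm64' }
            steps {
                sh './build.sh arm64'
            }
        }
    }
}
```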

What are pipelines?

Pipelines are scripts with sequences of automation steps. A pipeline can be as simple or as complex as the project. Many pipelines are built from the same modules. For me, the following building blocks are mandatory:

Compile code: The pipeline copies the code from one (or all) repos into the working folder and compiles it.

Testing: Every project should contain tests that cover most of the code's functionality. They reveal, for example, whether the developer has forgotten a dependency on another function, repo or software (interdependency).
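The two mandatory building blocks map directly onto stages. A minimal sketch for a Node.js project such as an Ionic app, assuming the Docker Pipeline plugin, the public node:20 image, and build and test scripts in package.json. Declarative pipelines loaded from a repo already check out the code automatically; the explicit checkout scm only makes that step visible.

```groovy
pipeline {
    // Run every stage inside a Node.js container
    agent {
        docker { image 'node:20' }
    }
    stages {
        stage('Checkout') {
            steps {
                // Fetch the repo this Jenkinsfile was loaded from
                checkout scm
            }
        }
        stage('Build') {
            steps {
                sh 'npm ci'
                sh 'npm run build'
            }
        }
        stage('Test') {
            steps {
                // --watch=false is passed on to ng test so it runs once and exits
                sh 'npm test -- --watch=false'
            }
        }
    }
}
```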

Automatic detective – static security test

Static analysis tools scan the software for possible exploits. They do not guarantee vulnerability-free software, but they are a first, fully automatable check that catches the big blunders. If this initial search for errors runs automatically, we need much less manual pentesting.
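As a simple stand-in for a security stage in an npm project, the stage below uses npm audit. Note that npm audit checks the declared dependencies against known vulnerabilities rather than analysing your own code; a dedicated static analysis tool would be called from the same place. This is a sketch, assuming npm is available on the agent.

```groovy
stage('Security check') {
    steps {
        // Exits non-zero (and fails the stage) if npm reports
        // vulnerabilities of severity "high" or above in the dependencies
        sh 'npm audit --audit-level=high'
    }
}
```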

Just build it

Every pipeline should produce a usable end product. Up to this point, the pipeline has only analysed the quality of the code. Now it should create a suitable executable for all end devices, CPU architectures, installation formats or operating systems. Docker is a convenient format because the image comes with its own customised runtime environment. This is important for the next step.
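One way to build a Docker image for several CPU architectures in a single step is Docker Buildx. A sketch of a stage, assuming Buildx is installed on the agent, a builder that supports multi-platform builds is selected, and the agent is already logged in to the registry register.lan that appears in the Jenkinsfile of this tutorial:

```groovy
stage('Build multi-arch image') {
    steps {
        dir('frontend-ionic') {
            // Builds the image for x86_64 and ARM64 and pushes both variants under one tag
            sh 'docker buildx build --platform linux/amd64,linux/arm64 -t register.lan/s20:nightly --push .'
        }
    }
}
```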

Make it directly executable – install

Once we have a finished Docker image, the pipeline can install it with a single command. Preparation is only needed once, when setting up the Docker host. Every future installation is based on the Docker image, which contains everything needed to run the software.

Continuous Delivery (CD) goes one step further: after all test steps have passed, the system is installed in production. Traditional software development includes a separate test and acceptance phase. With CD, the company can abuse part of its fan community for this 🙂 (beta versions, insider previews). If the fans are dissatisfied, the company rolls back to the previous version.
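Installation and rollback can share one deploy job: the image tag becomes a build parameter, and a rollback is simply a rerun with an older tag. A minimal sketch, assuming the agent can run docker, every build pushes its image under its own tag, and the app in the image listens on port 80; the container name s20 and host port 8100 are hypothetical.

```groovy
pipeline {
    agent any
    parameters {
        // Assumption: older images are still in the registry under their own tags
        string(name: 'IMAGE_TAG', defaultValue: 'nightly', description: 'Image tag to deploy')
    }
    stages {
        stage('Deploy') {
            steps {
                sh "docker pull register.lan/s20:${params.IMAGE_TAG}"
                // Remove the running container; "|| true" ignores the error if none exists
                sh 'docker rm -f s20 || true'
                sh "docker run -d --name s20 -p 8100:80 register.lan/s20:${params.IMAGE_TAG}"
            }
        }
    }
}
```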

Optional steps (from my point of view)

Code style: Should the opening brace go on the same line as the if statement or on the next one? Should variables be declared with let or int? These questions can only be answered subjectively. The only important thing is that the developers agree on one scheme. A code style checker then checks every change against it.

Branches: Depending on the build or its result, the pipeline may have to take different branches. Pipelines can become arbitrarily complex. Keep in mind that one pipeline can also build on other pipelines.

Human testing: Software that goes directly into the hands of end customers should be tested by at least one person. Human testers can check aspects such as graphical user interfaces, which automated tests find difficult to reproduce. Once the testers approve the version, the pipeline continues.
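For a TypeScript project, one possible checker is Prettier, whose --check mode fails when files deviate from the agreed format. A sketch of a stage, assuming Prettier is installed as a dev dependency and configured in the repo; the file pattern is only an example.

```groovy
stage('Code style') {
    steps {
        // Exits with a non-zero code if any matching file is not formatted
        // according to the project's Prettier configuration
        sh 'npx prettier --check "src/**/*.{ts,html,scss}"'
    }
}
```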
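Declarative pipelines express such branches with a when condition, and the build step can start another pipeline. A sketch, assuming a multibranch pipeline (so the branch name is known) and a hypothetical second job called deploy-frontend:

```groovy
stage('Trigger deployment') {
    // Only runs for builds of the main branch
    when { branch 'main' }
    steps {
        // Start the hypothetical job "deploy-frontend" without waiting for it to finish
        build job: 'deploy-frontend', wait: false
    }
}
```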
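The input step pauses a pipeline until a person confirms it in the Jenkins UI. A sketch of an approval stage; the message and the one-day timeout are example values, and the run is aborted if nobody approves within that time.

```groovy
stage('Manual approval') {
    steps {
        // Abort the run if nobody reacts within one day
        timeout(time: 1, unit: 'DAYS') {
            // Waits for a tester to click "Release" (or abort) in the Jenkins UI
            input message: 'Has a tester approved this version?', ok: 'Release'
        }
    }
}
```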

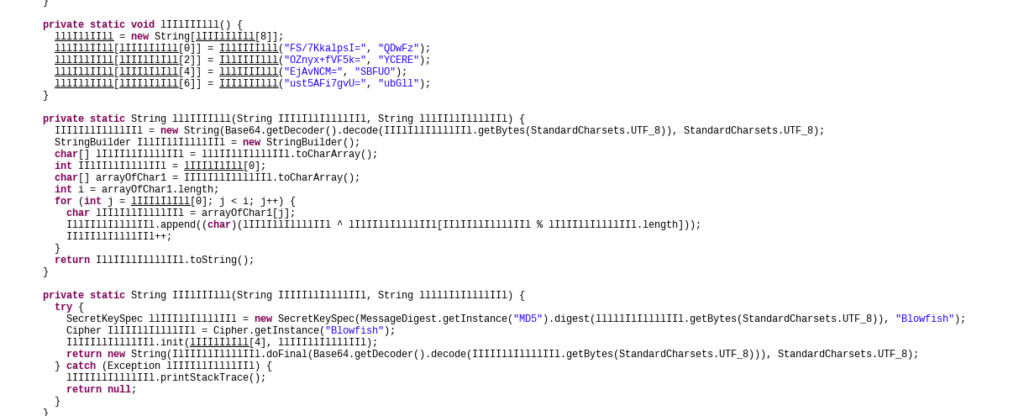

Obfuscation: Many companies do not want to give away their hard-earned code. This is why they use obfuscation techniques that turn clean code into human-unfriendly, cumbersome code. Variable names, for example, consist of random sequences of “I” and “l” so that the code looks as unappetising as possible.

Branding: Every customer wants their own app with their own logo and corporate colours. This step can be integrated into the pipeline towards the end of the build process.
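A choice parameter lets whoever starts the build pick the customer, and a stage copies that customer's assets into the app before the final build step. A sketch for an Ionic project; the branding/ folder layout and the customer names are hypothetical, while src/theme/variables.scss is where Ionic Angular projects keep their theme colours.

```groovy
pipeline {
    agent any
    parameters {
        // Hypothetical customer names, one folder per customer under branding/
        choice(name: 'CUSTOMER', choices: ['acme', 'globex'], description: 'Customer branding to apply')
    }
    stages {
        stage('Branding') {
            steps {
                // Overwrite the default logo and theme with the customer's versions
                sh "cp branding/${params.CUSTOMER}/logo.png src/assets/logo.png"
                sh "cp branding/${params.CUSTOMER}/variables.scss src/theme/variables.scss"
            }
        }
        // ... followed by the regular build stages
    }
}
```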

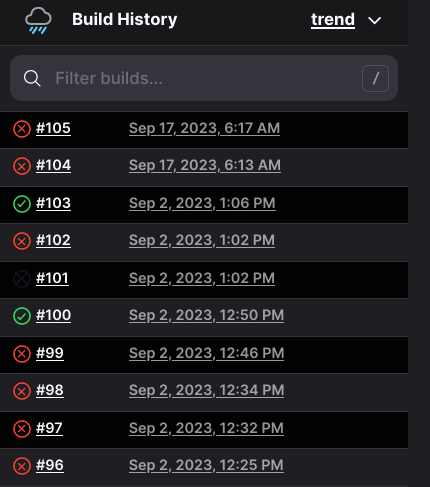

Jenkins tutorial – the installation

The easiest way to install Jenkins is with Docker. If you are new to Docker, work through a Docker tutorial first. Docker is also the key technology for implementing CI/CD according to today's standards. Alternatively, you can install Jenkins natively (it is a Java application), but then you have to think about your own native deployment scripts.

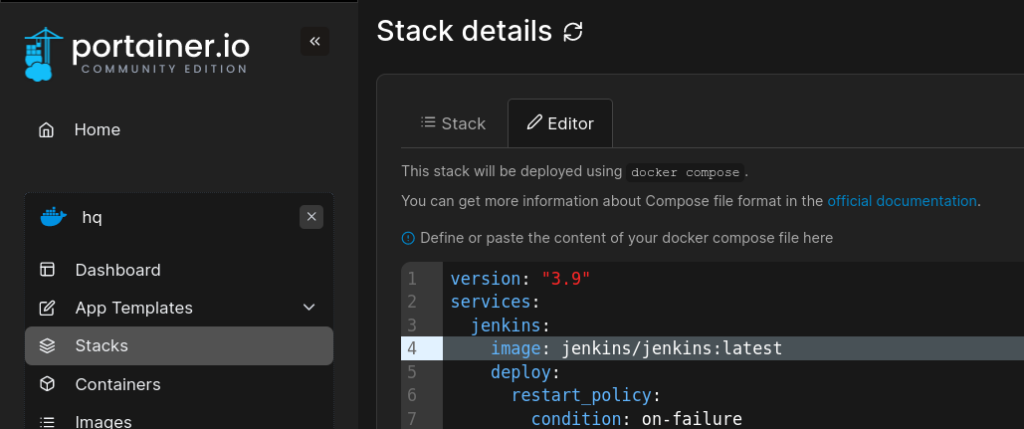

The Docker Compose file to get started looks like this:

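docker-compose.yml

```yaml
version: "3.9"

services:
  jenkins:
    image: jenkins/jenkins:latest
    deploy:
      restart_policy:
        condition: on-failure
        delay: 3s
        max_attempts: 5
        window: 60s
    # root is needed here so that Jenkins can use the mounted Docker socket
    user: root
    ports:
      # Jenkins web UI: Jenkins listens on port 8080 inside the container
      - "8080:8080"
      # Port for inbound Jenkins agents
      - "50000:50000"
    container_name: jenkins
    networks:
      - mysqlnetwork
    volumes:
      # Persist jobs and configuration in a named volume
      - jenkinsstorage:/var/jenkins_home
      # Socket of the rootless Docker daemon of host user 1000
      - /run/user/1000/docker.sock:/var/run/docker.sock
      # Docker CLI binary from the host, so pipelines can call docker
      - /home/user/bin/docker:/usr/local/bin/docker

volumes:
  jenkinsstorage:

networks:
  mysqlnetwork:
    external: true
    name: mysqlnetwork
```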

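Then run the command docker compose up in the same folder. Because the network mysqlnetwork is declared as external, it must already exist; if necessary, create it once with docker network create mysqlnetwork.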

Create the basis

The simplest method is to start a pipeline manually: you press the “Build Now” button and the pipeline runs. The more elegant solution is to start the pipeline automatically with every commit (or merge) on a Git branch, using webhooks.

I demonstrate Jenkins using the app framework Ionic. You can use any other code or framework.
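In a declarative Jenkinsfile, automatic starts are configured in a triggers section. A sketch, assuming the GitHub plugin is installed and the GitHub repo has a webhook pointing to the Jenkins URL followed by /github-webhook/; pollSCM is the fallback for setups where GitHub cannot reach Jenkins, and the stage is only a placeholder.

```groovy
pipeline {
    agent any
    triggers {
        // Start a run when GitHub reports a push via the webhook
        githubPush()
        // Fallback: check the repo for new commits roughly every 5 minutes
        pollSCM('H/5 * * * *')
    }
    stages {
        stage('Build') {
            steps {
                echo 'Triggered by a new commit'
            }
        }
    }
}
```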

Create an Ionic project in a public Git repo.

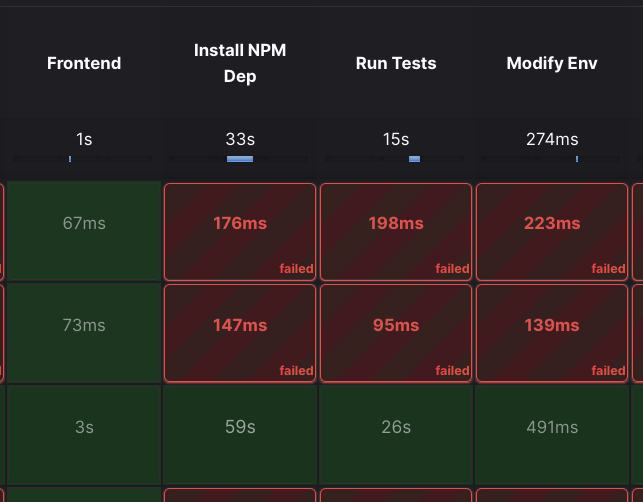

Create a Jenkinsfile (by convention, a file named Jenkinsfile in the root of the repository). You have to learn the Jenkins syntax step by step. Your file will look different for each repo unless you work in exactly the same way. In principle, you can use “sh” to execute shell commands for building, testing or configuring. Jenkins also supports variables.

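```groovy
pipeline {
    environment {
        registry = 's20'
        // ID of the registry credentials stored in Jenkins
        registryCredential = '977ae189-bf38-4b7c-a7c4-ccc5f6111858'
        dockerImage = ''
    }
    // Default agent for the whole pipeline
    agent {
        docker {
            image 'busybox'
        }
    }
    // Declarative pipelines require all stages to sit inside a stages block
    stages {
        stage('Frontend') {
            // This stage runs in a Node.js image that also ships Puppeteer (headless Chrome)
            agent {
                docker {
                    image 'satantime/puppeteer-node:19-buster-slim'
                }
            }
            stages {
                stage('Install NPM Dep') {
                    steps {
                        dir('frontend-ionic') {
                            sh 'npm install -f'
                            sh 'npm install -g @angular/cli'
                            sh 'npm i -D puppeteer && node node_modules/puppeteer/install.js'
                        }
                    }
                }
                stage('Run Tests') {
                    steps {
                        dir('frontend-ionic') {
                            // --watch=false: run the tests once instead of watching for changes
                            sh 'ng test --watch=false'
                        }
                    }
                }
                stage('Modify Env') {
                    steps {
                        dir('frontend-ionic') {
                            // Swap in the production configuration before building the image
                            sh 'cp src/app/config.prod.ts src/app/config.ts'
                        }
                    }
                }
                stage('Build Ionic Docker') {
                    steps {
                        dir('frontend-ionic') {
                            script {
                                dockerImage = docker.build 's20:nightly'
                            }
                        }
                    }
                }
                stage('Push Ionic Docker') {
                    steps {
                        script {
                            dir('frontend-ionic') {
                                // Log in to the private registry and push the image built above
                                docker.withRegistry('https://register.lan', registryCredential) {
                                    dockerImage.push()
                                }
                            }
                        }
                    }
                }
            }
        }
    }
}
```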

Always keep the nesting Stages > Stage > Steps > (optional: script and/or dir for a subfolder) > Command / Action. Give every stage a unique name. The sh lines contain the shell commands that perform the actions. The agent defines the environment in which the pipeline or an individual stage runs, here a Docker container.